CASE 1 CONTINUED Call to 911

Fortunately for Ms. S, a friend who read her Facebook posts called 911; even then, however, 16 hours passed between the initial postings and the patient’s arrival at the ED. When emergency medical services brought Ms. S to the Comprehensive Psychiatry Emergency Program, she acknowledged suicidal ideation without an active plan. She also reported depressed mood, a sense of hopelessness, feelings of worthlessness lasting >2 months, low self-esteem, and dissatisfaction with body image, as well as a recent verbal altercation with a friend.

Ms. S was admitted to the inpatient unit for further observation and stabilization.

CASE 2 CONTINUED No one answered her calls

Ms. B, who did not arrive at the ED until 2 days after her suicidal posts, corroborated the history given by her mother. She also reported that she had attempted to reach out to her friends for support, but no one had answered her phone calls. She felt hurt because of this, Ms. B said, and impulsively ingested the pills.

Ms. B said she regretted the suicide attempt. Nevertheless, in light of her recent attempt and persistent distress, she was admitted to the inpatient unit for observation and stabilization.

Can artificial intelligence help?

No effective means exists for tracking high-risk patients after their first contact with the mental health system, even though (1) those who attempt suicide are at high risk of subsequent suicide attempts3 and (2) self-expressed online cues offer the potential to prevent future attempts. We propose using machine learning, a branch of artificial intelligence, to capture and process suicide notes on Facebook in real time.

Machine learning can be broadly defined as computational methods using experience to improve performance or make accurate predictions. In this context, “experience” refers to past information, typically in the form of electronic data collected and analyzed to design accurate and efficient predictive algorithms. Machine learning, which incorporates fundamental concepts in computer science, as well as statistics, probability, and optimization, already has been established in a variety of applications, such as detecting e-mail spam, natural language processing, and computational biology.13

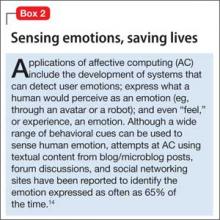

Affective computing, also known as emotion-oriented computing, is a branch of artificial intelligence that involves the design of systems and devices that can recognize, interpret, and process human moods and emotions (Box 2).14

Poulin et al15 developed prediction models to estimate the risk of suicide from keywords and multiword phrases in unstructured clinical notes, drawn from a national sample of U.S. Veterans Administration medical records; the models achieved an inference accuracy of ≥65%. Pestian et al16 created and annotated a collection of suicide notes, a vital resource for scientists to use for machine learning and data mining. Machine learning algorithms based on such notes and clinical data might be used to capture alarming social media posts by high-risk patients and activate crisis management, with potentially life-saving results.
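To make the idea of scoring text by keywords and multiword phrases concrete, the following is a minimal sketch in Python. It is not the method of Poulin et al or Pestian et al; the phrase list, the weights, the intercept, and the function names are all hypothetical and chosen only for illustration. A real model would learn its weights from annotated data and would require clinical validation.

```python
import math
import re
from collections import Counter

# Hypothetical weights for illustration only; a real model would learn these
# from annotated notes, and these values are not taken from any study.
PHRASE_WEIGHTS = {
    "hopeless": 1.2,
    "worthless": 1.0,
    "no one": 0.6,
    "cannot go on": 2.0,
}
BIAS = -2.0  # hypothetical intercept


def extract_phrases(text, max_n=3):
    """Count every 1- to max_n-word phrase in the lowercased text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(words) - n + 1):
            counts[" ".join(words[i:i + n])] += 1
    return counts


def risk_score(text):
    """Return a logistic score between 0 and 1 from weighted phrase counts."""
    counts = extract_phrases(text)
    z = BIAS + sum(w * counts[p] for p, w in PHRASE_WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))
```

In this sketch, a post containing several weighted phrases scores closer to 1 than a post containing none. The score is only a ranking signal for review and would not by itself indicate that a person is at risk.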

But limitations remain

It is not easy to identify or analyze people’s emotions based on social media posts; emotions can be implicit, based on specific events or situations. Distinguishing among different emotions purely on the basis of keywords requires handling great subtlety. Framing algorithms to include multiple parameters (the duration of suicidal content and the number of suicidal posts, for example) would help mitigate the risk of false alarms.
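One way to picture a multi-parameter rule of this kind is the sketch below, which raises an alert only when both the number of flagged posts and the time they span exceed a threshold. The `Post` type, the `should_alert` function, and both default thresholds are assumptions made for illustration, not clinical guidance; the `flagged` field stands in for the output of whatever upstream text classifier is used.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Post:
    posted_at: datetime
    flagged: bool  # assumed output of an upstream text classifier


def should_alert(posts, min_flagged=2, min_duration=timedelta(hours=6)):
    """Alert only if enough flagged posts span a long enough period.

    Both thresholds are hypothetical placeholders, not clinical guidance.
    """
    times = sorted(p.posted_at for p in posts if p.flagged)
    if len(times) < min_flagged:
        return False
    # Duration is measured from the earliest to the latest flagged post.
    return times[-1] - times[0] >= min_duration
```

Stricter thresholds reduce false alarms but can delay or miss a genuine alert, so any real deployment would need to set them with clinical input.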

Another problem is that not all Facebook profiles are public. In fact, only 28% of users share all or most of their posts with anyone other than their friends.17 This limitation could be addressed by urging patients identified as being at high risk of suicide during an initial clinical encounter with a mental health provider to “friend” a generic Web page created by the hospital or clinic to protect patients’ privacy.

As Lehavot et al10 wrote in their report of a clinician who discovered a patient’s explicitly suicidal Facebook post, the incident “did not hinder the therapeutic alliance.” Instead, the team leader said, the discovery deepened the therapeutic relationship and helped the patient “better understand his mental illness and need for increased support.”

Bottom Line

Machine learning algorithms offer the possibility of analyzing status updates from patients who express suicidal ideation on social media and alerting clinicians to the need for early intervention. In the meantime, clinicians can take steps now to use social media, Facebook in particular, to monitor those at high risk and potentially prevent suicide attempts.

Related Resource

• Ahuja AK, Biesaga K, Sudak DM, et al. Suicide on Facebook. J Psychiatr Pract. 2014;20(2):141-146.

Acknowledgement

Zafar Sharif, MD, Associate Clinical Professor of Psychiatry, Columbia University College of Physicians and Surgeons, and Director of Psychiatry, Harlem Hospital Center, New York, New York, and Michael Yogman, MD, Assistant Clinical Professor of Pediatrics, Harvard Medical School, Boston Children’s Hospital, Boston, Massachusetts, provided insight into the topic and useful feedback on the manuscript of this article.