Deciphering artificial neural networks

The promise of health care information technology relies heavily on statistical methods and software constructs, including logistic regression, random forest modeling, clustering, and neural networks. The machine learning-enabled image analysis used to detect diabetic retinopathy and to differentiate a malignant melanoma from a normal mole is based on neural networks.

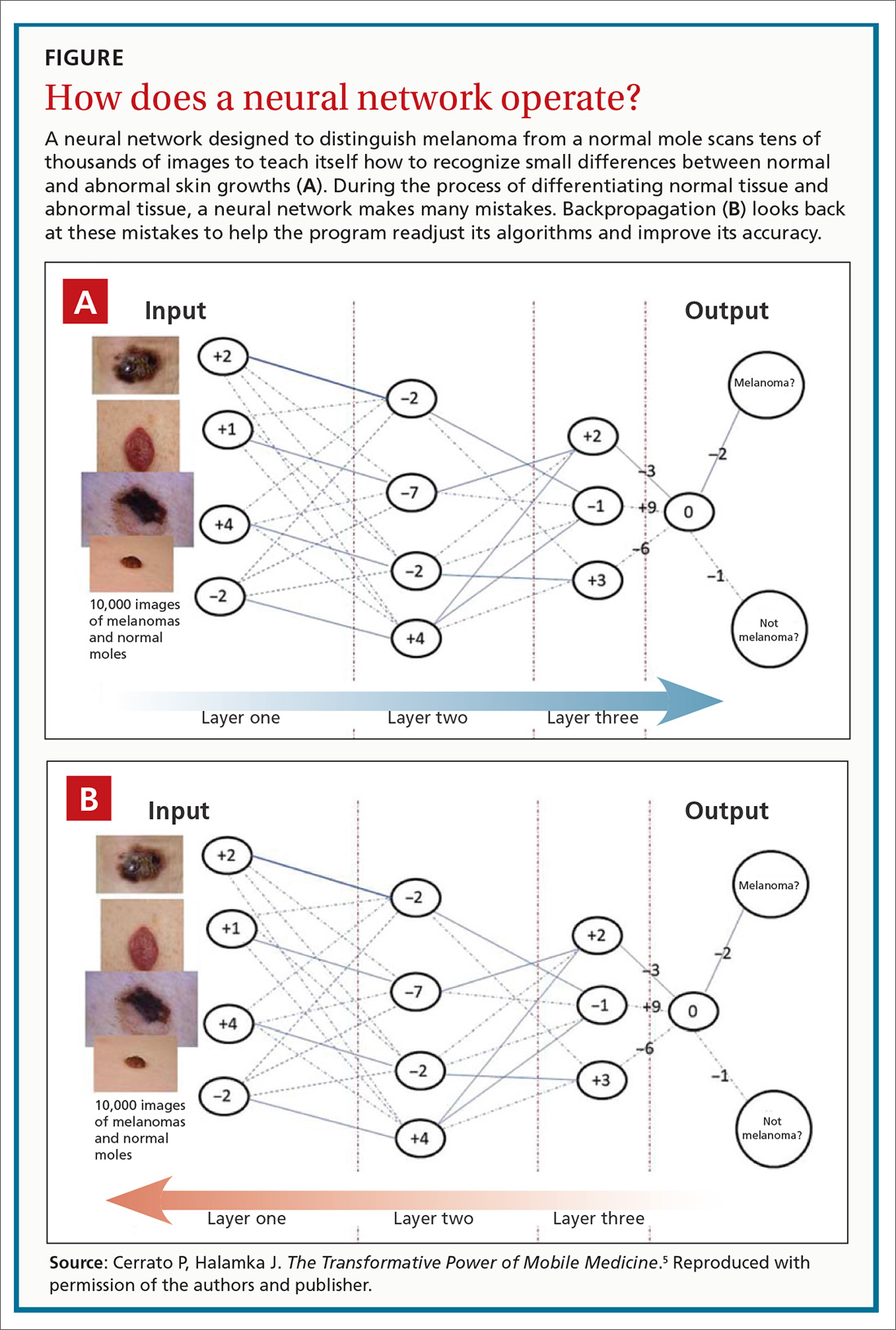

As we discussed in the body of this article, these networks mimic the nervous system: they comprise computer-generated “neurons,” or nodes, connected by “synapses” (FIGURE).⁵ When a node in Layer 1 is excited by pixels from a scanned image, it passes that excitement, represented as a numerical value, to a second set of nodes in Layer 2, which in turn sends signals to the next layer, and so on.
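To make the layer-by-layer flow concrete, here is a minimal Python sketch of a forward pass. It assumes the common textbook setup in which each node sums its weighted inputs and passes the result through a sigmoid function; the network size, weights, pixel values, and 0.5 decision threshold are all hypothetical. Real image-analysis networks have millions of learned weights and typically use convolutional layers.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a node's summed input into an 'excitement' value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one layer.

    weights[j][i] is the strength of the 'synapse' from input node i to node j.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


def network_forward(pixels, layers):
    """Pass the pixel values through each layer in turn; return the last layer's output."""
    signal = pixels
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Hypothetical toy network: 4 "pixels" -> 3 hidden nodes -> 1 output node.
# The weights are invented for illustration, not learned from real images.
layers = [
    ([[0.2, -0.5, 0.1, 0.7],
      [-0.3, 0.8, 0.4, -0.2],
      [0.6, 0.1, -0.7, 0.3]], [0.0, 0.1, -0.1]),
    ([[1.2, -0.8, 0.5]], [-0.2]),
]
pixels = [0.9, 0.1, 0.4, 0.8]

score = network_forward(pixels, layers)[0]
diagnosis = "positive" if score >= 0.5 else "negative"
print(f"output score = {score:.3f} -> {diagnosis}")
```

Each call to `layer_forward` plays the role of one layer handing its numerical "excitement" to the next; the final single value is read as a positive or negative result.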

Eventually, the software’s interpretation of the image’s pixels reaches the output layer of the network, which generates a positive or negative diagnosis. During training, these initial outputs contain many errors, which are corrected by a backward analytic process called backpropagation that adjusts the strength of the connections between nodes. The video tutorials mentioned in the main text explain neural networks in more detail.
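Backpropagation sends the network's error on labeled examples backward through the layers to estimate how much each connection contributed to it, and each weight is then nudged to reduce the error (gradient descent). The sketch below is a minimal illustration, not how any commercial system is built: it trains a hypothetical network with 2 inputs, 3 hidden nodes, and 1 output on an invented four-example data set, using a cross-entropy loss. The data, layer sizes, learning rate, and number of training rounds are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical toy data: two input features and a label (1 = positive).
# The labels follow a simple OR rule so the network has something learnable.
DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
N_IN, N_HIDDEN = 2, 3

random.seed(0)
params = {
    "w_h": [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)],
    "b_h": [0.0] * N_HIDDEN,
    "w_o": [random.uniform(-1, 1) for _ in range(N_HIDDEN)],
    "b_o": 0.0,
}


def forward(x):
    """Forward pass: inputs -> hidden layer -> single output probability."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(params["w_h"], params["b_h"])]
    out = sigmoid(sum(w * h for w, h in zip(params["w_o"], hidden)) + params["b_o"])
    return hidden, out


def mean_loss():
    """Average cross-entropy between the network's outputs and the labels."""
    total = 0.0
    for x, y in DATA:
        _, p = forward(x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(DATA)


def train_step(lr=0.5):
    """One round of backpropagation followed by a gradient-descent weight update."""
    g_wh = [[0.0] * N_IN for _ in range(N_HIDDEN)]
    g_bh = [0.0] * N_HIDDEN
    g_wo = [0.0] * N_HIDDEN
    g_bo = 0.0
    for x, y in DATA:
        hidden, p = forward(x)
        delta_out = p - y  # output error for a sigmoid output with cross-entropy loss
        g_bo += delta_out
        for j in range(N_HIDDEN):
            g_wo[j] += delta_out * hidden[j]
            # Propagate the output error backward to hidden node j.
            delta_h = delta_out * params["w_o"][j] * hidden[j] * (1 - hidden[j])
            g_bh[j] += delta_h
            for i in range(N_IN):
                g_wh[j][i] += delta_h * x[i]
    n = len(DATA)
    # Nudge every weight against its averaged gradient.
    for j in range(N_HIDDEN):
        params["w_o"][j] -= lr * g_wo[j] / n
        params["b_h"][j] -= lr * g_bh[j] / n
        for i in range(N_IN):
            params["w_h"][j][i] -= lr * g_wh[j][i] / n
    params["b_o"] -= lr * g_bo / n


loss_before = mean_loss()
for _ in range(5000):
    train_step()
loss_after = mean_loss()
print(f"mean loss before training: {loss_before:.3f}, after: {loss_after:.3f}")
```

All gradients are computed with the current weights before any weight is changed, which is why the update loop runs only after every example has been processed.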

Using the area under the receiver operating characteristic curve (AUROC) as a metric, and choosing an operating point for high specificity, the algorithm achieved sensitivities of 87% and 90.3% and specificities of 98.1% and 98.5% on 2 validation data sets for detecting referable retinopathy, as defined by a panel of at least 7 ophthalmologists. When the operating point was instead set for high sensitivity, the algorithm achieved sensitivities of 97.5% and 96.1% and specificities of 93.4% and 93.9% on the 2 data sets.
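The difference between the AUROC and an operating point can be shown with a small calculation. The sketch below uses ten invented model scores and labels (not data from the study) to compute sensitivity and specificity at two hypothetical thresholds, and computes the AUROC through its rank interpretation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative one.

```python
def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    tn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 0)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    return tp / (tp + fn), tn / (tn + fp)


def auroc(scores, labels):
    """Chance a random positive outscores a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores for 10 images; 1 = referable retinopathy per the graders.
scores = [0.95, 0.85, 0.70, 0.40, 0.30, 0.60, 0.20, 0.15, 0.10, 0.05]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

for name, threshold in [("high-specificity", 0.65), ("high-sensitivity", 0.25)]:
    sens, spec = sens_spec(scores, labels, threshold)
    print(f"{name} operating point (threshold {threshold}): "
          f"sensitivity {sens:.0%}, specificity {spec:.0%}")
print(f"AUROC = {auroc(scores, labels):.2f}")
```

With these made-up numbers, the 0.65 threshold gives 60% sensitivity and 100% specificity, while the 0.25 threshold gives 100% sensitivity and 80% specificity. The AUROC (0.92 here) is a single summary of the whole curve and does not change with the threshold; only the operating point moves along the curve.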

These results are impressive, but the researchers used a retrospective approach in their analysis. A prospective analysis would provide stronger evidence.

That shortcoming was addressed by a pivotal clinical trial that convinced the US Food and Drug Administration (FDA) to approve the technology. Michael Abramoff, MD, PhD, of the University of Iowa Department of Ophthalmology and Visual Sciences, and his associates⁶ conducted a prospective study that compared a machine learning-based algorithm, the commercialized IDx-DR, with the gold standard for detecting retinopathy, the Fundus Photograph Reading Center (of the University of Wisconsin School of Medicine and Public Health). IDx-DR is a software system used in combination with a fundus camera that captures retinal images. The researchers found that “the AI system exceeded all pre-specified superiority endpoints at sensitivity of 87.2% ... [and] specificity of 90.7% ....”
